VexU Coprocessor

'Twas the night before the World Championship, and all through the house, the programmers were tuning autons.

As is usually my role, I'm sort of the morale booster / voice of reason for the last-minute crunch that the programming team has. I personally never really got invested in VexU; I always tended to do my own things. This past week, that meant revisiting the co-processor problem described below. I've had rather different results this time, and it seems like a good time to report on the changes.

Probably the biggest change is that all the weirdness has gone away. The randomly disappearing bytes from last year (and a couple of years prior) have stopped disappearing. Who knows what the difference is, but it certainly made development a lot more enjoyable. Now we can assume that the USB connection will be reasonably stable for transmitting data back and forth. It is genuinely as simple as reading from stdin and writing to stdout on the Vex Brain. Of course, that complicates your ability to have debug print statements in the code, since anything printed to stdout ends up in the data stream. But it works very well now; even with a really, really slow implementation, I was pushing about 18 KiB/s of data.

The next problem is data structure. I decided I wanted to use a serialization library to marshal/unmarshal my data, and I settled on FlatBuffers. I had never worked with this library before, but it focuses on speed, specifically by minimizing the number of copies needed for a particular data structure. Most importantly, it's header-only, which makes integrating it into Vex Code slightly less painful than otherwise (more on this later). Using FlatBuffers was a little confusing at first, but I got the hang of it eventually. This led to needing to frame the buffer data. While the USB transmission is reliable, it's not always clear where in the stream you are. Moreover, if you happened to lose a couple of bytes, the whole system would get out of sync. I decided to null-terminate my messages, which would allow me to recover if any bytes did get lost. This of course led to needing to escape any null bytes in the binary-encoded FlatBuffers. I reused most of the COBS code from last year.
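To make the framing idea concrete, here is a minimal Python sketch of null-terminated COBS frames. It is not the project's actual code: the function names (`cobs_encode`, `cobs_decode`, `frame`, `split_frames`) are made up for illustration, and the payloads are arbitrary bytes standing in for serialized FlatBuffers.

```python
def cobs_encode(data: bytes) -> bytes:
    """Standard COBS: the returned bytes never contain 0x00."""
    out = bytearray()
    block = bytearray()  # zero-free bytes waiting for their length code
    for byte in data:
        if byte == 0:
            out.append(len(block) + 1)  # code = distance to the removed zero
            out += block
            block.clear()
        else:
            block.append(byte)
            if len(block) == 254:       # longest block a one-byte code can cover
                out.append(0xFF)        # 0xFF means "254 bytes, no zero follows"
                out += block
                block.clear()
    out.append(len(block) + 1)
    out += block
    return bytes(out)


def cobs_decode(encoded: bytes) -> bytes:
    """Inverse of cobs_encode; raises ValueError on obviously damaged input."""
    out = bytearray()
    i = 0
    while i < len(encoded):
        code = encoded[i]
        if code == 0:
            raise ValueError("zero byte inside a COBS frame")
        end = i + code
        if end > len(encoded):
            raise ValueError("truncated COBS frame")
        out += encoded[i + 1:end]
        i = end
        if code != 0xFF and i < len(encoded):
            out.append(0)               # restore the zero this code replaced
    return bytes(out)


def frame(payload: bytes) -> bytes:
    """Encode one message and terminate it with the null delimiter."""
    return cobs_encode(payload) + b"\x00"


def split_frames(stream: bytes):
    """Return (decoded payloads, leftover bytes of an unfinished frame)."""
    *complete, rest = stream.split(b"\x00")
    payloads = []
    for raw in complete:
        if not raw:
            continue
        try:
            payloads.append(cobs_decode(raw))
        except ValueError:
            pass  # damaged frame: drop it, the next delimiter resynchronizes us
    return payloads, rest


first, second = b"hello\x00world", b"\x00\x01\x02"
stream = frame(first) + frame(second)
damaged = stream[:2] + stream[3:]       # simulate one byte lost in transit
payloads, rest = split_frames(damaged)
```

Because the delimiter can never appear inside a frame, a lost byte only damages the frame it belonged to; the reader picks up again at the next null. COBS alone will not catch every corruption, so a checksum inside the payload would be a sensible addition.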

Perhaps the hardest part was the testing. My time on a Vex Brain (the control computer on the robot) was limited by the fact that Team WHOOP had multiple robots all being used concurrently for programming and practice. I had to develop some systems to test without actually having the hardware. While the Vex Brain runs C++, the compiler is packaged with their own editor, Vex Code, and it's rather restrictive in its feature set. For example, even though it uses the clang compiler, the user cannot pass arbitrary compile flags. Likewise, Vex Code looks in a particular directory structure for the files it needs. I managed to sidestep this with a mess of flags and files that ran on Linux. This allowed me to test the C++ portions with standard testing frameworks, such as Google Test, and to run end-to-end tests by connecting the Python code to a dummy compiled file which emulated the robot. This allowed me to find most of the bugs before my very limited time with the actual robot hardware.
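The end-to-end idea can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the real emulator was a compiled C++ binary, whereas here a tiny Python child process (`FAKE_ROBOT`, a hypothetical stand-in that upper-cases each line) plays the robot, and `round_trip` is a made-up helper name. The point is that the host code talks to the child over stdin/stdout, the same way it would talk to the Brain.

```python
import subprocess
import sys

# Hypothetical stand-in for the compiled robot emulator:
# it reads a line from stdin and answers with the same line in upper case.
FAKE_ROBOT = (
    "import sys\n"
    "for line in iter(sys.stdin.readline, ''):\n"
    "    sys.stdout.write(line.upper())\n"
    "    sys.stdout.flush()\n"
)


def round_trip(messages):
    """Send each message to the fake robot and collect its replies."""
    replies = []
    with subprocess.Popen(
        [sys.executable, "-u", "-c", FAKE_ROBOT],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
    ) as robot:
        for message in messages:
            robot.stdin.write(message + b"\n")
            robot.stdin.flush()          # make sure the child actually sees it
            replies.append(robot.stdout.readline().rstrip(b"\r\n"))
    # leaving the with-block closes the pipes and waits for the child to exit
    return replies


replies = round_trip([b"ping", b"drive 10"])
```

Swapping the `[sys.executable, "-u", "-c", FAKE_ROBOT]` command for the path to a compiled emulator would leave the rest of the test unchanged.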

I also experimented with OpenCV for this game, as well as some DepthAI cameras. The vision was a little harder this year than last, but very early experiments showed promise.

In conclusion, this is a fun project and very much possible. Just give yourself more than four days. I'm excited to see what teams will do with the additional hardware.

This is the original summary. Some of it is now incorrect, but the overall ideas are still sound.

This project is on GitHub: Link

I've been tangentially involved in VexU (team WHOOP) for many years while working with Aggie Robotics. Most of the time I'm around to hang out and tell people if they're doing something that has no chance of working. However, due to how the schedule fell in 2021, I ended up getting really involved with the VexU team between the state championship and the world championship. Because of the pandemic, the world championship was scheduled for the middle of summer, which gave us six weeks between the end of the semester and the championship. I was working for Facebook at the time, but needed something fun to do at night once the work day ended.

I decided to tackle the problem of integrating the Vex hardware with an external co-processor. The VexU rules allow you to have pretty much any electronics you want as long as they don't produce motion (i.e. are not a motor). All the motion comes from motors running through the Vex V5 brain, a proprietary system developed by Vex. However, the V5 brain does have a USB serial port. It's intended for debugging, but data is data; certainly it can be used.

I was not the first member of Aggie Robotics to have this idea, nor was I the first to attempt to solve it. However, I was determined to be the one who solved it. I was warned of the major roadblock: some bytes would disappear. In short, sometimes (not always), any lowercase "p" (byte 112) sent would just disappear from the serial stream. I didn't believe it at first, but sure enough some 112s randomly vanished (and some "pp" became "p"). Armed with this knowledge, I did the only logical thing: I made sure to never send a lowercase "p". I achieved this by writing an implementation of COBS which escaped all the 112s (as opposed to COBS usually escaping null characters). I tested it with a Python implementation of 112-COBS on a Raspberry Pi: I generated megabytes of data, sent it to the brain, had it echoed back, and checked that the result was unchanged. It worked.
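For readers curious what a "112-free" encoding could look like, here is one possible sketch in Python. It is an assumption, not the original implementation: it runs ordinary COBS (which guarantees no 0x00 in the output) and then XORs every output byte with 112, so the byte that can never appear becomes 112 instead of 0. The names `encode_no_p` and `decode_no_p` are invented for this example, and message framing is left out.

```python
import os

BAD = 112  # ord("p"), the byte that must never go over the wire


def cobs_encode(data: bytes) -> bytes:
    """Ordinary COBS: the output contains no 0x00."""
    out = bytearray()
    for run in data.split(b"\x00"):      # zero-free runs between the zeros
        while len(run) >= 254:
            out += b"\xff" + run[:254]   # full block, no zero implied after it
            run = run[254:]
        out.append(len(run) + 1)         # code = run length + 1
        out += run
    return bytes(out)


def cobs_decode(encoded: bytes) -> bytes:
    out = bytearray()
    i = 0
    while i < len(encoded):
        code = encoded[i]
        if code == 0 or i + code > len(encoded):
            raise ValueError("damaged COBS data")
        out += encoded[i + 1:i + code]
        i += code
        if code != 0xFF and i < len(encoded):
            out.append(0)                # put back the zero the code stood for
    return bytes(out)


def encode_no_p(data: bytes) -> bytes:
    """COBS output has no 0, so XOR with BAD yields output with no BAD."""
    return bytes(b ^ BAD for b in cobs_encode(data))


def decode_no_p(wire: bytes) -> bytes:
    return cobs_decode(bytes(b ^ BAD for b in wire))


# Echo-style check in the spirit of the test described above:
# random data plus a deliberately "p"-heavy tail must survive the round trip.
payload = os.urandom(4096) + b"pp" * 300
wire = encode_no_p(payload)
echoed = decode_no_p(wire)
```

The overhead is the same as plain COBS: at most one extra byte per 254 bytes of payload, plus one.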

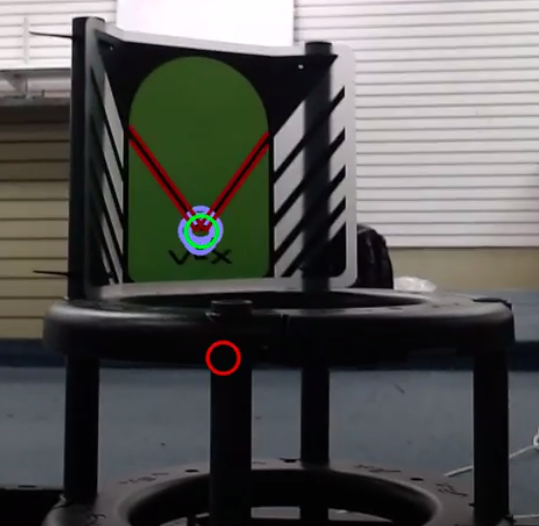

Armed with the knowledge that it was possible to stream data back and forth, I turned my attention to doing something with this system. The 2021 Vex Game (Change Up) was clearly designed for the new VexAI competition. The goals had big vibrant green targets on top; the balls (game pieces) were massive red and blue blobs. I threw some computer vision at the problem and after some tuning was able to get decent results. I had two algorithms for detecting the goals. The first was to find the 'V' shape in the goal by finding lines at a certain angle to each other, and the second was to template-match a low-resolution sample image against every position in the camera image. Both worked, but the latter ran faster and had the option of GPU acceleration on something like a Jetson Nano, if we could find space for it on the robot.

Thus all that was left was to do the integration step. This went surprisingly smoothly. Based on the scale and position of the target in the camera image, we could estimate our distance. We could then adjust our alignment and position and score the ball. Things were going very smoothly, until they weren't. One day, due to an update in who knows what, the whole thing fell apart. Now, the serial connection would randomly drop. Sometimes it came back; other times, no matter what we tried, it was just gone until everything was unplugged and rebooted. Of course this was right around the time that my summer internship was picking up steam and the competition was days away. Since I wasn't willing to pull the necessary all-nighters to fix this, we cut our losses and it never ended up seeing any use in competition matches.
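As a rough illustration of estimating distance from the target's apparent size, here is a Python sketch based on the pinhole camera model. The model, the function names, and all the numbers (focal length in pixels, target width, image width) are assumptions for the example, not values from the actual robot.

```python
import math


def estimate_distance(real_width_m: float, width_px: float, focal_px: float) -> float:
    """Pinhole model: an object looks smaller in proportion to its distance."""
    if width_px <= 0:
        raise ValueError("target width in pixels must be positive")
    return focal_px * real_width_m / width_px


def estimate_bearing(center_x_px: float, image_width_px: float, focal_px: float) -> float:
    """Angle (radians) of the target from the camera axis; positive = to the right."""
    return math.atan2(center_x_px - image_width_px / 2, focal_px)


# Hypothetical numbers: a 0.30 m wide target seen 90 px wide by a camera
# with a 600 px focal length, centered at x = 400 in a 640 px wide image.
distance_m = estimate_distance(0.30, 90, 600)
bearing_rad = estimate_bearing(400, 640, 600)
```

The focal length in pixels would normally come from a camera calibration; without one, it can be approximated by measuring the target's pixel width at a known distance and solving the same equation for `focal_px`.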

Included below are some images of the process. Likewise, there are two videos showing the two different goal detection methods. Things were working and showing a lot of promise; it was just too little, too late. It's annoying that this never worked as it could have, especially since the game was so perfect for vision detection. However, learning still occurred, so it can't be all bad.

Goal Detect by looking for V shape